Why does Quick Analysis give different scores for the exact same moves?

True, maybe that was a bad example. Why would it give different suggestions to the same move?

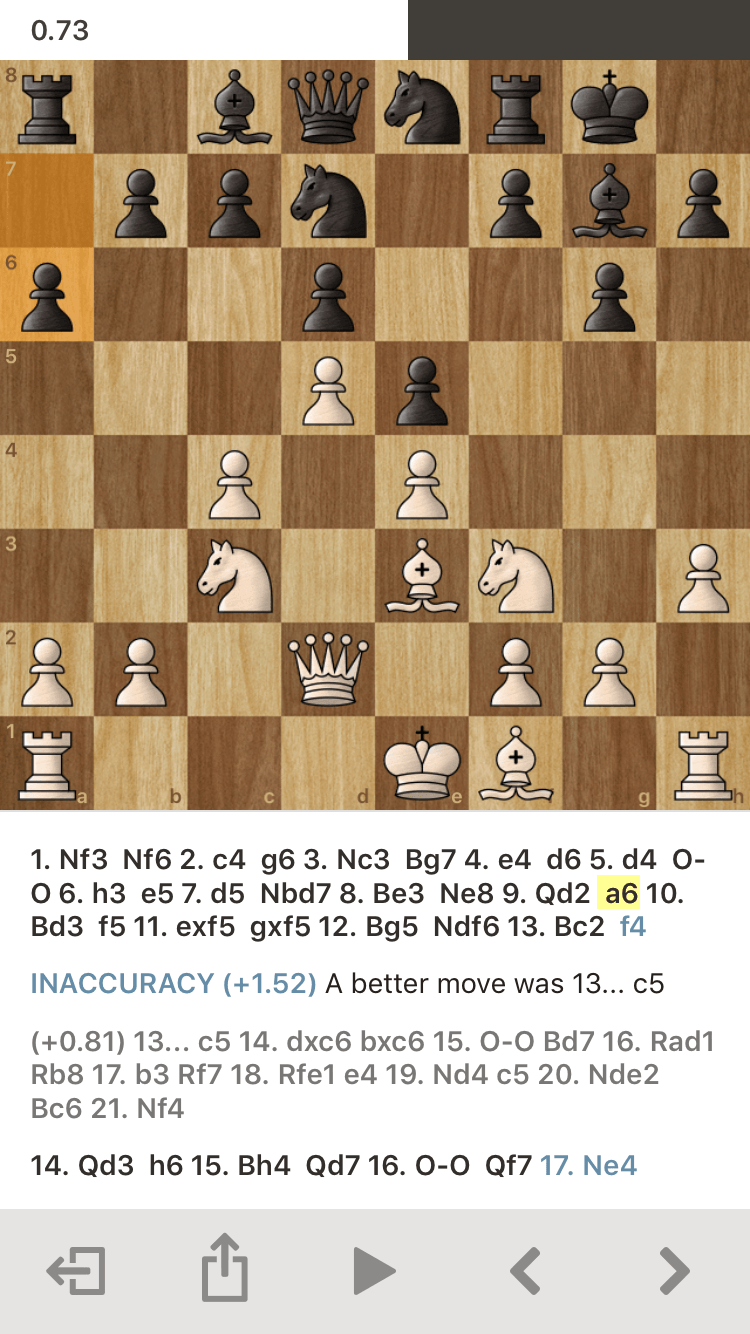

This move here (9. Qd2 a6) shows different scores (0.93 vs 0.73) and one is a blunder, while the other is not. The game is exactly the same though.

I guess, especially if you use the Quick Analysis, that some random lines are picked for deeper analysis, and once a certain threshold is reached that line is selected. Try analyzing the games at a greater depth and see if there is still a difference.

I don't know how many ply are used - does chess.com vary the ply depending on the speed of the user's computer/phone?

In any case, the differing numbers and best moves remind me of the differences I get with Stockfish 8 on Lucas Chess when I set it to 12 ply (a few seconds/half move on my computer) vs 20 ply (about 70 seconds/half move) or when I use the abbreviated-but-deep engine, Deepfish 7.

Have you ever used an engine?

As it thinks, the eval constantly changes. The longer it thinks, the less it changes... but you can also e.g. clear the hash and try it again and get slightly different numbers even after long thinks.

If the #1 and #2 best moves are very close in evaluation, then even after long thinks the same engine on the same machine can suggest different moves.

I assume chess.com analysis does a whole game very quickly. For good analysis you'd want at least something like 30 seconds per half move. For a 40-move game (80 half moves) that would take 40 minutes. And for difficult positions, ideally you'd go much longer, with the human assisting the engine by exploring useful moves.
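To make the arithmetic explicit, here is a tiny sketch of the time budget. The function name is just made up for illustration; the numbers are the ones from the post above.

```python
def analysis_minutes(full_moves, seconds_per_half_move):
    """Total analysis time in minutes for a game of `full_moves` moves."""
    half_moves = 2 * full_moves  # each full move is one White ply plus one Black ply
    return half_moves * seconds_per_half_move / 60


# 40 moves at 30 seconds per half move
print(analysis_minutes(40, 30))  # 40.0
```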

---

Long story short: it's a quick and dirty analysis.

Based on my reading of Stockfish (which may operate similarly to chess.com's engine), the engine does not start fresh after each board position to evaluate the next. Therefore, if you arrive at the same board position in different ways, the engine may very well have different internal states and hence different scores. (I'm assuming your previous 37 moves weren't the same.)

Try this: just before evaluating that 38th move, issue the UCI new game command (ucinewgame) and ask for an eval. I'd bet it'll give you the same scores.
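For anyone who wants to try the reset idea on a local engine, here is a rough sketch of the commands involved. It only builds the text you would send to a UCI engine such as Stockfish (type them into the engine's console or pipe them in); it does not talk to chess.com. The function name, the sample moves, and the depth are made up for illustration. The commands themselves (uci, isready, ucinewgame, position, go depth) are standard UCI.

```python
def fresh_eval_commands(moves, depth=20):
    """Build UCI commands that reset the engine, then analyse one position.

    `moves` is the game so far in UCI long algebraic notation (e.g. "e2e4").
    """
    position = "position startpos"
    if moves:
        position += " moves " + " ".join(moves)
    return [
        "uci",             # handshake
        "isready",
        "ucinewgame",      # tell the engine to forget state from earlier searches
        "isready",         # wait until the reset has finished
        position,          # set up the position to evaluate
        f"go depth {depth}",  # fixed depth rather than a time limit
    ]


# Hypothetical example: the position after 1. e4 e5, searched to depth 12
for command in fresh_eval_commands(["e2e4", "e7e5"], depth=12):
    print(command)
```

Using a fixed depth instead of a time limit keeps the comparison from depending on how fast the machine is.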

I've read that chess.com uses Stockfish as their chess engine (can't confirm this for sure though).

Then, really, it should get the same score.

There's also the possibility of machine architecture differences in floating point arithmetic (and even more in software implementations). Also, if chess.com is running in a virtualized computing environment, there may be processing limits imposed, bugs, different hardware, a different Stockfish build, or whatever else that create small differences which trigger a different search branch (and hence the large difference in the result).

I'm assuming that Stockfish is set to a depth limit rather than a time limit, because it is already hard to compare time-limited results on real machines; it's nearly impossible on a VM.

Instead of guessing at chess.com's engine or what platform it is run on (or even which arithmetic library it's compiled against), have you tried it on Stockfish?

If you have an Android tablet, you can try entering the moves into

https://play.google.com/store/apps/details?id=com.adamtai.analysisboard

It'll give you Stockfish analysis for each and every move (white/black). Look for the "CP", which is the score, and find where it starts to deviate.

Compare that to a PC's Stockfish result or use that to check chess.com's result. Android is supported on MIPS, Intel, and ARM, so we might even get a taste of different architectures just from the members here.
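If you copy the CP numbers from two runs into lists, finding where they start to deviate could be sketched like this. The function name, the tolerance, and the sample scores are all made up for illustration (only the last pair echoes the 0.93 vs 0.73 from the screenshot, written as centipawns).

```python
def first_deviation(scores_a, scores_b, tolerance_cp=15):
    """Return the 1-based half-move number where two runs first differ
    by more than `tolerance_cp` centipawns, or None if they never do."""
    for ply, (a, b) in enumerate(zip(scores_a, scores_b), start=1):
        if abs(a - b) > tolerance_cp:
            return ply
    return None


# Hypothetical centipawn scores per half move from two analyses of one game
run_a = [25, 30, 18, 40, 93]
run_b = [25, 28, 20, 41, 73]
print(first_deviation(run_a, run_b))  # 5
```

Small differences of a few centipawns are normal, which is why the sketch uses a tolerance instead of checking for exact equality.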

I don't have an Android tablet, but I'll try this with Stockfish for Mac. Thanks!

My friend and I send each other screenshots of our games sometimes and we noticed that the Quick Analysis will give each of us a different score for the same move. Does anyone know why/how this happens? Even with the horizon effect, shouldn't the score be the same given the same input?

Example:

Blunder (-7.92) the best move was 38. Kf2

vs. Blunder (-8.95) the best move was 38. Kg2