Deep engine analysis; compared to GM play and human appreciation

Modern engines on fast computers play so much better than human grandmasters that we should expect a decent percentage of computer moves to be humanly incomprehensible. I remember when I first tried going through some of Bobby Fischer's games: I couldn't believe the crazy moves he (and his GM opponents) were making. At first I wondered whether it was a joke, because some of those moves looked flat-out terrible. They were way over my head, and I couldn't understand much of what either side was trying to accomplish. GMs play at a completely different level from beginners and novices, who are mainly concerned with the absolute basics, like getting their pieces out and making sure everything is protected. When Bobby Fischer makes a deep positional sacrifice, it's going to look nonsensical from that point of view. Human GMs trying to understand games between modern chess engines are in much the same position.

The main issue with computers in chess is that if you're not a half-decent chess player yourself, you may come to all sorts of erroneous conclusions about them.

*Stockfish is much stronger than a GM, even at 20 plies. There is just no comparison, and on deeper searches it's stronger still. I have no idea where you got the idea that Stockfish is "pretty weak" in any area of the game.

*A low-depth search (5 plies or so) is really terrible for any engine. But nobody needs to care about that, since Stockfish can reach 15+ plies in half a second.

*Stockfish, like all engines, doesn't play on "intuition". The only way to explain its moves is to understand the variations (at least the important ones) and the critical evaluations. It calculates so many variations that a human just can't follow them all, and its evaluation function is impossible for a human to emulate, so humans have to rely on their own positional understanding.

*Going deep into Stockfish analysis is completely useless at your level, and even at much higher levels. Overall, I'd say an engine is only good for checking your blunders and your ideas. For best effect, use your own brain first, before consulting Stockfish; otherwise you just parrot engine moves.

*Stockfish evaluates based on board position, like pretty much every other chess engine. However, I think there are some specific algorithms that let it evaluate in the middle of a tree of variations and decide whether it's worth extending a certain line past the depth to which it's calculating other lines. I'm not sure of this, but if so, it's an extremely powerful way to see deeper tactics quickly.

*All good engines will be very difficult to understand in general, I'd say.
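The reply about extending some lines past the nominal depth describes something close to a well-known technique called quiescence search (alongside "search extensions" in general). It also bears on the point that very shallow searches are terrible: a fixed-depth search stops in the middle of a capture sequence and misjudges it (the "horizon effect"). Below is a minimal sketch on a hand-built toy tree; the `Node` class, the scores, and the moves are all made up for illustration, and this is not Stockfish's code. At depth 0 the search keeps following captures only, and the side to move may "stand pat" on the static score instead of capturing.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class Node:
    static: int  # static evaluation in centipawns, from White's point of view
    # move name -> (child node, True if the move is a capture)
    moves: Dict[str, Tuple["Node", bool]] = field(default_factory=dict)


def quiesce(node: Node, white_to_move: bool) -> int:
    """Follow captures only; the side to move may decline them (stand pat)."""
    best = node.static  # stand pat: keep the static score and stop capturing
    for child, is_capture in node.moves.values():
        if not is_capture:
            continue
        score = quiesce(child, not white_to_move)
        best = max(best, score) if white_to_move else min(best, score)
    return best


def search(node: Node, depth: int, white_to_move: bool,
           use_quiescence: bool) -> Tuple[float, str]:
    """Fixed-depth minimax; returns (score, best move at this node)."""
    if depth == 0 or not node.moves:
        leaf = quiesce(node, white_to_move) if use_quiescence else node.static
        return leaf, ""
    best_score = float("-inf") if white_to_move else float("inf")
    best_move = ""
    for move, (child, _) in node.moves.items():
        score, _ = search(child, depth - 1, not white_to_move, use_quiescence)
        improved = score > best_score if white_to_move else score < best_score
        if improved:
            best_score, best_move = score, move
    return best_score, best_move


# Toy position: Nxe5 wins a pawn, but ...dxe5 then wins the knight back.
recapture = Node(static=-200)
grab = Node(static=100, moves={"dxe5": (recapture, True)})
develop = Node(static=10)
root = Node(static=0, moves={"Nxe5": (grab, True), "Nf3": (develop, False)})

print(search(root, 1, True, use_quiescence=False))  # (100, 'Nxe5')
print(search(root, 1, True, use_quiescence=True))   # (10, 'Nf3')
```

At depth 1 without the capture extension, the search stops right after Nxe5 and happily grabs the pawn; with it, the recapture is seen and the quiet move wins out.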

I've been playing around with Stockfish a bit. Of course, one of the first things I did was to test it against my own chess engine (one that I wrote a while back for fun). Here are some interesting observations:

My chess engine plays like me (mainly because I wrote it without knowing anything about engine programming, so I programmed it to work the way I think I'd play). At its center is a heuristic move generator that tries to force/entice/wish/move towards the closest or best target board positions using tactics. It works well in the opening and is somewhat OK in the endgame, but the middlegame is weak because there are so many branches.
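The engine's actual code isn't shown, so the following is only a hypothetical illustration of what "move towards pre-set positions" can look like, not the poster's implementation. Positions are simplified to `square -> piece` mappings, and `mismatch` and `greedy_choice` are invented names. The chooser scores each candidate move by how close its resulting position is to the nearest target pattern, with no look-ahead at all, which is exactly why such a move is easy to explain but blind to the opponent's replies.

```python
from typing import Dict, List, Tuple

# Simplified position: square name -> piece letter, e.g. {"g1": "N"}.
Position = Dict[str, str]


def mismatch(position: Position, target: Position) -> int:
    """Count squares of the target pattern that the position doesn't match."""
    return sum(1 for sq, piece in target.items() if position.get(sq) != piece)


def greedy_choice(candidates: Dict[str, Position],
                  targets: List[Position]) -> str:
    """Pick the move whose resulting position is closest to any target."""
    def closeness(item: Tuple[str, Position]) -> int:
        _, pos = item
        return min(mismatch(pos, t) for t in targets)
    return min(candidates.items(), key=closeness)[0]


# Hypothetical target: a knight on f3 and a bishop on c4.
targets = [{"f3": "N", "c4": "B"}]
candidates = {
    "Nh3": {"h3": "N", "f1": "B"},
    "Nf3": {"f3": "N", "f1": "B"},
}
print(greedy_choice(candidates, targets))  # Nf3
```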

Stockfish evaluates based on board position, which allows it to search deeper within an acceptable search space. In my program, by contrast, the number of searchable branches explodes hyper-exponentially after 2-3 plies, which is a good description of its desperation :) This also means Stockfish may make moves that seem very illogical until several plies later (and only if its assumed countermoves are actually played), by which time the original position score may be far from correct because different countermoves were played. This makes it hard for a person to follow. For example, Stockfish plays move A at ply 10, then a nice move B at ply 20, and there's no obvious link between them. Did A lead to B? Ten plies took place in between.
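To put rough numbers on the branching explosion: with a uniform branching factor `b`, plain minimax to depth `d` visits `b**d` leaves, while alpha-beta with perfect move ordering needs only `b**ceil(d/2) + b**floor(d/2) - 1` leaves (the Knuth-Moore minimal tree). The sketch assumes `b = 35`, a commonly quoted rough average for chess middlegames; real engines prune and reduce far more aggressively than plain alpha-beta, so treat this as a lower bound on the savings rather than a model of Stockfish.

```python
def full_minimax_leaves(b: int, d: int) -> int:
    """Leaves visited by plain minimax with uniform branching factor b."""
    return b ** d


def best_case_alphabeta_leaves(b: int, d: int) -> int:
    """Knuth-Moore minimal tree: alpha-beta leaves with perfect move ordering."""
    return b ** ((d + 1) // 2) + b ** (d // 2) - 1


B = 35  # assumed average branching factor
for depth in (2, 4, 6, 8):
    print(depth, full_minimax_leaves(B, depth),
          best_case_alphabeta_leaves(B, depth))
```

Good move ordering roughly doubles the depth reachable for the same effort, which is one reason an engine built around fast evaluation plus pruning can see so much further than a hand-written heuristic search.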

With my engine, I can almost see why it's doing what it's doing, a bit like watching a beginners' game: OK, the knight goes there to get into a fork position, or the bishop is trying to trade itself for a rook, all because of its "drive" towards pre-set positions. With Stockfish, once the depth goes above 20, it becomes very hard for me to understand why a move was made. (I use 20 only because that's the depth I've mostly been looking at; based on some observations, I suspect the real cutoff is much lower, around 5. I've put together an example app for the curious.)
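The question "did A lead to B?" is what an engine's principal variation (PV) records: the move at the root is chosen because of the best line found beneath it, and the score comes from the position at the end of that line. A minimal sketch of alpha-beta minimax that returns the PV alongside the score, on a made-up three-ply tree (move names and centipawn values are invented; real engines add many refinements on top of this basic search):

```python
from typing import Dict, List, Tuple, Union

# Toy tree: an inner node maps move names to children; a leaf is a static
# evaluation in centipawns from White's point of view.
Tree = Union[int, Dict[str, "Tree"]]


def alphabeta(node: Tree, alpha: float, beta: float,
              white_to_move: bool) -> Tuple[float, List[str]]:
    """Return (score, principal variation) for `node`."""
    if isinstance(node, int):
        return node, []
    best_line: List[str] = []
    if white_to_move:
        best = float("-inf")
        for move, child in node.items():
            score, line = alphabeta(child, alpha, beta, False)
            if score > best:
                best, best_line = score, [move] + line
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # Black already has a better option elsewhere: prune
    else:
        best = float("inf")
        for move, child in node.items():
            score, line = alphabeta(child, alpha, beta, True)
            if score < best:
                best, best_line = score, [move] + line
            beta = min(beta, best)
            if alpha >= beta:
                break  # White already has a better option elsewhere: prune
    return best, best_line


tree = {
    "A": {"a1": {"x": 30, "y": -20}, "a2": {"x": 50, "y": 40}},
    "B": {"b1": {"x": 10, "y": 90}, "b2": {"x": -60, "y": 0}},
}
print(alphabeta(tree, float("-inf"), float("inf"), True))  # (30, ['A', 'a1', 'x'])
```

Move A is "explained" only by the whole line A, a1, x: White avoids B not because B looks bad on its own, but because Black's reply b2 holds White to 0. With a 20-ply PV, that chain of reasoning is simply much longer.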

For those who know more: would you say Stockfish plays like a grandmaster when set to a deep search? (At low-ply searches, Stockfish seems pretty weak except when there are few pieces on the board.) In other words, if somebody were to comment on its moves, would they be logical in human terms, such as "here's a great move, setting up x and y" or "here's a risky move"? (Yes, I know there's no risk/blunder/bluff in Stockfish, but maybe a human commentator could read more into its moves and interpret them for better human understanding.)

I'm wondering (and I didn't get much response to another of my posts, so this might be a slight duplication) whether going deep in Stockfish analysis is at all useful for a human learning and improving at chess.

Ah, this post is already too long. Thanks in advance.

I've found that the biggest difference between what I think is a good move and what Stockfish calls an inaccuracy or blunder is this: I'm following a rule of thumb that will certainly lead me to a win or material gain, and Stockfish dislikes it because my choice takes more moves to reach mate or the gain than the shortest route it calculated.
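One plausible mechanism behind this is how engines commonly score forced mates: a mate found `n` plies from the root gets a score of some large constant minus `n`, so among several winning moves the quicker mate always ranks highest. The sketch below assumes that convention; the constant `MATE = 32000` and the ply counts are illustrative assumptions, not values taken from Stockfish or from the game in this thread. An analysis tool that labels moves by their score gap to the best move could therefore flag a slower but perfectly winning move; the exact thresholds real tools use are not shown here.

```python
MATE = 32000  # assumed sentinel, larger than any material-based score


def mate_in_moves(n: int) -> int:
    """Score for delivering mate on our n-th move (2n - 1 plies from now)."""
    return MATE - (2 * n - 1)


def plies_to_mate(score: int) -> int:
    """Recover the mate distance in plies from a mate score."""
    return MATE - score


# Hypothetical: both routes are forced wins, but the engine still ranks them.
candidates = {
    "book_route": mate_in_moves(12),
    "fastest_route": mate_in_moves(7),
}
best = max(candidates, key=candidates.get)
print(best, candidates)
```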

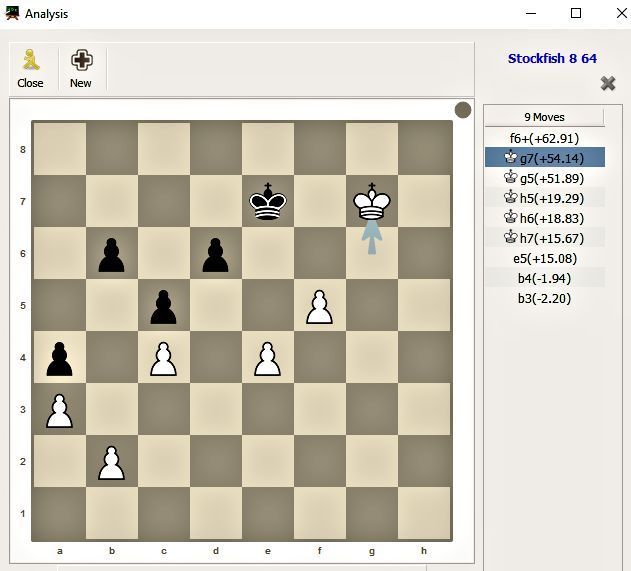

Here's a recent game of mine where Stockfish (both in chess.com's deepest analysis and at 20 plies in Lucas Chess, as shown) considered my move Kg7 an "inaccuracy", even though every endgame book would tell you that keeping the opposition and ensuring your 5th-rank pawn will queen with Kg7 is the way to go. Stockfish preferred f6+, probably because it leads to the fastest possible mate with best play by both sides. But it would have left the Black king on f8 in a stalemate-like position, and I would have had to make a queen with another passed pawn while carefully making sure he always had a move.

Can we try your engine?

Currently it runs as a compiled program with a text interface where you must enter every move in FEN (basically, you enter the board position each time and it outputs the next board position). I wrote it before I knew about UCI; actually, before I knew much about chess engines at all (or about their separation from the GUI, etc.).
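For readers unfamiliar with FEN: its first field lists the board rank by rank from rank 8 down to rank 1, separated by `/`, with digits standing for runs of empty squares; the remaining fields give side to move, castling rights, en passant square, and move counters. UCI itself accepts positions the same way through its `position fen ...` command. A minimal sketch of expanding and re-compressing the piece-placement field (the function names are made up for this example, and the other FEN fields are ignored):

```python
from typing import List

Board = List[List[str]]  # 8 ranks, rank 8 first; "." marks an empty square


def parse_placement(fen: str) -> Board:
    """Expand the first (piece placement) field of a FEN string."""
    placement = fen.split()[0]
    board: Board = []
    for rank in placement.split("/"):
        row: List[str] = []
        for ch in rank:
            if ch.isdigit():
                row.extend("." * int(ch))  # a digit is a run of empty squares
            else:
                row.append(ch)  # uppercase = White piece, lowercase = Black
        if len(row) != 8:
            raise ValueError(f"bad rank in FEN: {rank!r}")
        board.append(row)
    if len(board) != 8:
        raise ValueError("FEN placement must have 8 ranks")
    return board


def placement_to_fen(board: Board) -> str:
    """Compress a board back into the FEN piece-placement field."""
    ranks = []
    for row in board:
        out, empty = "", 0
        for sq in row:
            if sq == ".":
                empty += 1
            else:
                if empty:
                    out += str(empty)
                    empty = 0
                out += sq
        if empty:
            out += str(empty)
        ranks.append(out)
    return "/".join(ranks)


fen = "rnbqkbnr/pppppppp/8/8/4P3/8/PPPP1PPP/RNBQKBNR b KQkq e3 0 1"  # after 1.e4
board = parse_placement(fen)
print(board[4][4])  # P  (row 4 is rank 4, column 4 is the e-file)
print(placement_to_fen(board) == fen.split()[0])  # True
```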

I had plans to put my engine into an app, but that was before I learned about Stockfish. Now that is low priority (actually no priority) since I've integrated Stockfish into my app.